The GL-1130US is suitable for recognizing road vehicles thanks to its scanning frequency of up to 200 Hz, providing basic data for traffic flow surveys.

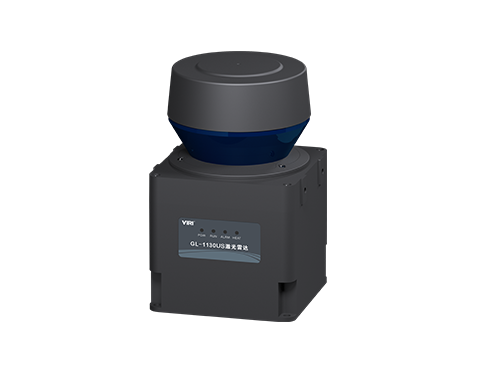

The GL-11 Series is a measurement-type single-line LiDAR that supports both indoor and outdoor applications. With its excellent measurement capabilities, it can effectively measure distances of up to 100 meters to targets with a reflectivity of 10%. The maximum scanning angle range is 270°, the scanning frequency is up to 200 Hz, and the angular resolution can be as fine as 0.125°. It adopts an industrial-grade protection design, which makes it suitable for scenarios with strict reliability and performance requirements, such as highways, railways, electric power, and ports/terminals.

Emission frequency: 144000/288000 Hz

Scanning frequency: 100 Hz, 200 Hz

Scanning range: 30 m @ 10%, 65 m @ 90%

Scanning angle: 270°

Light resistance grade: 80000 lx

Angular resolution: 0.125°, 0.25°, 0.5°

| Product Model | GL-1130US |
| --- | --- |
| Dimensions (L x W x H) | Rear interface: 136 × 129 × 205.5 mm; bottom interface: 125 × 129 × 217 mm |
| Weight | <2 kg |
| Laser class | 1, eye-safe (IEC 60825-1) |
| Laser source | 905 nm |
| Emission frequency | 144 kHz / 288 kHz |
| Operating range | 65 m @ 90%, 30 m @ 10% |
| Scanning frequency | 100/200 Hz |
| Aperture angle | 270° |
| Angular resolution | 0.125°/0.25°/0.5° |
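
The emission frequency, scanning frequency, and angular resolution listed above appear to be linked by simple arithmetic. The sketch below assumes that laser pulses are spread evenly over a full 360° rotation of the scanner, which the specification does not state explicitly. The function names are hypothetical and used only for illustration.

```python
def angular_resolution_deg(emission_hz, scan_hz):
    """Angle between consecutive pulses, assuming pulses are spread
    evenly over a full 360-degree rotation (an assumption)."""
    pulses_per_rotation = emission_hz / scan_hz
    return 360.0 / pulses_per_rotation


def points_per_scan(aperture_deg, resolution_deg):
    """Number of measurement points inside the aperture angle,
    assuming both end angles are included (an assumption)."""
    return int(round(aperture_deg / resolution_deg)) + 1


if __name__ == "__main__":
    # Combinations of the listed emission and scanning frequencies.
    for emission_hz, scan_hz in [(144000, 100), (288000, 100),
                                 (144000, 200), (288000, 200)]:
        res = angular_resolution_deg(emission_hz, scan_hz)
        print(emission_hz, "Hz /", scan_hz, "Hz ->", res, "deg,",
              points_per_scan(270, res), "points per 270-degree scan")
```

Under this assumption, the listed frequency combinations reproduce the listed angular resolutions of 0.125°, 0.25°, and 0.5°.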

LiDAR (Light Detection and Ranging) typically uses a three-dimensional Cartesian coordinate system to describe the spatial positions of points in the laser scan point cloud. This coordinate system is often referred to as the LiDAR coordinate system or scanning coordinate system.

In the LiDAR coordinate system, the origin is typically located at the center of the LiDAR sensor, with the X-axis, Y-axis, and Z-axis representing different directions. The specific direction definitions may vary depending on the installation method and application scenario of the LiDAR sensor. For instance, in some cases the X-axis may represent the forward direction of a vehicle, the Y-axis the lateral direction, and the Z-axis the direction perpendicular to the ground, pointing upward. During scanning, each laser point has a corresponding coordinate value in this coordinate system, which describes its position in three-dimensional space. By processing and analyzing this point cloud data, we can obtain three-dimensional information about the surrounding environment, such as terrain, buildings, and vegetation.
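
For a single-line scanner, each measurement is a range at a known scan angle, so placing it in the sensor's coordinate system is a polar-to-Cartesian conversion. The following is a minimal sketch, assuming a 270° field of view centered on the X-axis (from -135° to +135°), angles increasing counter-clockwise, and a fixed angular step. The function name, the input layout, and the angle convention are illustrative assumptions, not the device's documented data format.

```python
import math


def scan_to_points(ranges_m, start_angle_deg=-135.0, step_deg=0.25):
    """Convert one 2D scan (ranges in meters, ordered by angle) into
    (x, y) points in the sensor frame: X forward, Y to the left."""
    points = []
    for i, r in enumerate(ranges_m):
        if r is None or r <= 0.0:
            continue  # skip missing or invalid returns
        theta = math.radians(start_angle_deg + i * step_deg)
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


if __name__ == "__main__":
    # Three hypothetical returns at -135.0, -134.75 and -134.5 degrees.
    for x, y in scan_to_points([5.0, 5.1, None]):
        print(round(x, 3), round(y, 3))
```

Because a single-line scanner measures in one plane, the Z coordinate in the sensor frame is zero; a mounting transform (not shown) would map these points into a vehicle or world frame.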

"LiDAR detection range is not far enough" indicates that the LiDAR system is unable to detect objects at sufficiently long distances. This limitation may be due to factors such as its power output, beam quality, receiver sensitivity, atmospheric conditions (e.g., fog, rain), scanning mode, or the reflectivity of the target objects. In applications that require long-range detection, a more powerful LiDAR system or alternative technologies may be needed to extend the detection range.

Data Synchronization: First, the LiDAR and camera must be synchronized in both time and space. They must capture information from the same scene simultaneously, and their data must be precisely aligned in time.

Feature Extraction and Matching: Camera tracking systems typically extract key feature points from video sequences, such as corner points, edge points, or texture points. These feature points are stable and distinguishable between frames, which makes them usable in subsequent tracking and pose estimation. The LiDAR can provide 3D information about the scene, including the position and shape of objects. By fusing data from both sensors, richer feature points can be extracted and matched more accurately.

Fusion Algorithm: To achieve collaborative tracking between LiDAR and cameras, a fusion algorithm needs to be developed to integrate their data. This algorithm can use techniques such as probabilistic models and Kalman filters to fuse the 3D data from the LiDAR with the 2D image data from the camera, resulting in more accurate tracking.

Collaborative Work: During collaborative tracking, the LiDAR and camera need to work together. The LiDAR can provide depth information about the scene and the 3D positions of objects, helping the camera determine the precise location of target objects. The camera, in turn, can provide appearance information about the targets, such as color and texture, helping the LiDAR identify target objects more accurately. Working together, the two sensors can achieve faster and more accurate tracking.

Optimization and Feedback: In practical applications, it may be necessary to continuously optimize the fusion algorithm and the collaboration mechanism to improve tracking accuracy and stability. At the same time, a feedback mechanism should be established so that deviations during tracking can be adjusted and corrected promptly.
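
The synchronization step can be illustrated with two small building blocks: pairing a scan with the camera frame closest in time, and projecting a LiDAR point into the image with a pinhole camera model. This is a sketch under stated assumptions, not a complete fusion pipeline: the function names, the 20 ms time tolerance, and the calibration values (rotation, translation, focal lengths, principal point) are hypothetical and would come from an actual calibration.

```python
def nearest_frame(scan_time_s, frame_times_s, max_gap_s=0.02):
    """Return the index of the camera frame closest in time to a scan,
    or None if no frame lies within max_gap_s seconds."""
    if not frame_times_s:
        return None
    idx = min(range(len(frame_times_s)),
              key=lambda i: abs(frame_times_s[i] - scan_time_s))
    gap = abs(frame_times_s[idx] - scan_time_s)
    return idx if gap <= max_gap_s else None


def project_point(p_lidar, rotation, translation, fx, fy, cx, cy):
    """Project a 3D point from the LiDAR frame to pixel coordinates.

    rotation (3x3 nested lists) and translation (3 values) map LiDAR
    coordinates into the camera frame: p_cam = R * p_lidar + t.
    Returns None for points behind the camera."""
    x, y, z = (
        sum(rotation[r][c] * p_lidar[c] for c in range(3)) + translation[r]
        for r in range(3)
    )
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


if __name__ == "__main__":
    # Hypothetical extrinsics: LiDAR X forward, Y left, Z up mapped to
    # camera X right, Y down, Z forward, with no offset between sensors.
    R = [[0, -1, 0], [0, 0, -1], [1, 0, 0]]
    t = [0.0, 0.0, 0.0]
    print(nearest_frame(0.105, [0.0, 0.1, 0.2]))
    print(project_point((10.0, 1.0, 0.0), R, t, 1000.0, 1000.0, 640.0, 360.0))
```

Once LiDAR points land on image pixels, the depth of each point can be attached to the camera features near it, which is the input a probabilistic or Kalman-filter-based fusion stage would work from.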

The recognition capability of LiDAR is closely related to the reflectance of objects. Reflectance refers to the percentage of incident radiation energy that is reflected by an object. Different objects have different reflectances, which depend mainly on the nature of the object itself, such as its material and surface, as well as on the wavelength of the electromagnetic wave and the angle of incidence.

When LiDAR detects a target object, it emits a laser beam and receives the reflected laser light from the object's surface. The reflectance of the object determines the intensity of the reflected light received by LiDAR. If an object has a higher reflectance, then LiDAR will receive more reflected light, thus improving its recognition capability for that object. Conversely, if an object has a lower reflectance, LiDAR will receive less reflected light, and the recognition capability will decrease accordingly.

Therefore, the recognition capability of LiDAR is closely related to the reflectance of objects. In practical applications, to improve the recognition capability of LiDAR, one can choose objects with high reflectance as targets, or increase the emitted laser power and optimize the focusing of the laser beam to enhance the intensity of the received reflected light, thus improving the recognition capability.
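
The relationship between reflectance and received signal can be sketched with a simplified model. The example below assumes a diffuse target that fills the whole laser beam, so the received intensity scales with reflectance divided by the square of the distance. It ignores atmospheric loss, incidence angle, and receiver characteristics, and the function name is hypothetical.

```python
def relative_return(reflectance, range_m):
    """Relative received intensity under a simplified model:
    a diffuse target filling the beam returns reflectance / range^2
    (arbitrary units, only meaningful for comparisons)."""
    if not 0.0 < reflectance <= 1.0:
        raise ValueError("reflectance must be in (0, 1]")
    if range_m <= 0.0:
        raise ValueError("range must be positive")
    return reflectance / range_m ** 2


if __name__ == "__main__":
    # Same distance, different reflectance: a 90% target returns
    # nine times the signal of a 10% target in this model.
    ratio = relative_return(0.9, 30.0) / relative_return(0.1, 30.0)
    print(round(ratio, 6))
```

Published operating ranges depend on additional factors such as the detection threshold and the optics, so they need not follow this idealized scaling exactly.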